Adventures in launching a small tech company and trying to build it correctly from the ground up

I want to start out by saying that I’m not a writer by nature. I’m very detailed and extremely technical, which often gets in the way of my ability to communicate with people who are not as far down the technical rabbit hole as I tend to go. Despite that, I want to improve my communication to help potential customers, fellow investors, and tech enthusiasts understand what shenanigans I’m up to (I promise, they’re not evil shenanigans).

Ok, so from the clip above, I’m sure you’ll soon understand just how often I speak in memes, movie references, TV show quotes, historical references, and many other fun/funny references to whatever is currently going around. If you follow me on Twitter, you already know this. Also, I don’t recommend following me on Twitter; it’ll make your brain hurt (it makes mine hurt, and I’m the one writing, retweeting, quote-tweeting, and liking the stuff).

Now that the silliness is out of the way, let’s get to the meat and potatoes (there will still be fun references along the way where I can make them, but I won’t shoehorn them in). While I’m not going to divulge my life story in this article, I will give enough background that people understand that when I speak as an authority, I actually do know what I’m talking about. From the fall of 2013 through April 2015, I worked at Rackspace ($RXT today; it was $RAX when I worked there). I then landed a job at Amazon Web Services ($AMZN), starting April 13, 2015, and worked there until February 28th of this year (2022), when I decided to launch my own company and work for myself.

At Rackspace, I was a Linux Administrator and earned my RHCSA and RHCE (Red Hat Certified System Administrator and Red Hat Certified Engineer, respectively). Those certifications expired in 2018, as I didn’t have a need or desire to renew them. At Amazon, I started as a cloud support engineer (CSE, a glorified Linux systems admin/engineer…but hands off). It was essentially an advisory role to customers, with less risk. I was also an SME (subject matter expert) for EC2 Linux while I was in premium support.

In 2018, I made an internal move to EC2 and became a Systems Development Engineer on an EC2 quality team, where one of my job functions was to look for systemic problems within the EC2 fleet leading to server failures (i.e., “dirty” reboots). Sadly, I can’t use the team name, as it uses a non-standard industry term that’s covered by NDA and just confuses people; the team’s abbreviation, however, was DQ. Another function was writing software that reported failures as a failure rate visible all the way up to AWS executives, reported bi-weekly (every 2 weeks, not twice per week). I’d be glad to discuss this in more detail in person, over email, etc. if someone is interested (without violating NDA), and I may write a generalized article about some of that work in the future. Suffice it to say, I’m an expert on highly available infrastructure, networking, Linux, and technology systems. I also have code on GitHub from when I was still in support, where I wrote some Python to help customers migrate from the shorter EC2 resource IDs to the longer, 17-character ID format.
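The migration code itself isn’t reproduced here, but the core distinction it dealt with is easy to sketch. The Python below is a minimal, hypothetical helper (not the code from that GitHub repository) that classifies an EC2-style ID by the length of the hex portion after the prefix: 8 characters for the older short format, 17 for the longer format. It assumes lowercase IDs of the form `prefix-hex`.

```python
import re

# "<prefix>-<hex>", e.g. "i-1a2b3c4d" (short) or "vol-0123456789abcdef0" (long).
EC2_ID_PATTERN = re.compile(r"(?P<prefix>[a-z]+)-(?P<body>[0-9a-f]+)")

SHORT_ID_HEX_LEN = 8   # older format
LONG_ID_HEX_LEN = 17   # longer format


def classify_ec2_id(resource_id: str) -> str:
    """Return "short", "long", or "invalid" for an EC2-style resource ID."""
    match = EC2_ID_PATTERN.fullmatch(resource_id)
    if match is None:
        return "invalid"
    hex_len = len(match.group("body"))
    if hex_len == SHORT_ID_HEX_LEN:
        return "short"
    if hex_len == LONG_ID_HEX_LEN:
        return "long"
    return "invalid"


if __name__ == "__main__":
    for rid in ("i-1a2b3c4d", "vol-0123456789abcdef0", "snap-xyz"):
        print(rid, "->", classify_ec2_id(rid))
```

A check like this is only the first step of a migration. The real work is finding every place (scripts, databases, column widths) that assumed the short length.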

Enough of the background and credentials; it’s time to talk about setting up my company for success, now and in the future. All businesses, large or small, need certain foundational technologies to support normal business operations:

- email
- website
- authentication
- authorization
- file/document storage
- accounting software
- sales software (point of sale, invoicing, etc.)
- some level of networking (depends on scale and need)
- internet
- method to accept payment and pay bills (bank, cc processor, crypto/bitcoin wallet, wire transfer, cash, check)

I’m sure I’m leaving some out; these are just some of the considerations businesses need to make when starting up, aside from needing some kind of product or service to sell in the market. I’m leaving off marketing/advertising, because I have strong opinions on advertising, which I’ve talked about ad nauseam on my Twitter account. A lot of work goes into setting up a website (if you’re doing your own site, with your own design, and self-hosting), running email, creating authentication and authorization procedures and systems, and building the other systems that support the business. Often, businesses will use a service like Google Apps or Microsoft products to achieve these goals, as that cuts down on the complexity and the need for a specialist who can do such work. Managing all of those systems by hand requires an administrator who either already has the specific knowledge or can quickly learn how to set it up.

One thing businesses have learned is that people are capital-intensive. Hiring IT staff drains useful capital that could be spent on product/service development, additional staff to support the product/service offering, HR, accountants, etc. As a result, many will offload the work to a combination of automation and pre-built services (such as Google Apps). Larger companies that can afford some IT staff and want to roll their own technology stack, however, lean on automation to minimize those costs when out-of-the-box solutions don’t work for them. As a DIY-er, I prefer to build things myself, because I trust myself to build them correctly more than I trust other companies to get them right. So, I have set about configuring key portions of my underlying business to manage internal services automatically, so that I can focus on other tasks rather than spending hours updating servers, installing and configuring complex software, and tediously deploying software to development environments for testing, then to production environments when it’s complete.

I have a very complex infrastructure. I run my own homespun router, network switch configurations, a server cluster, a server that builds servers with an OS and default configurations, dashboards, a website on a web server in AWS, a password manager server, and Ethereum validators. I clearly need a way to manage all of this quickly and efficiently, as I’m the only person working on these pieces of the business puzzle at this time. I’ve given myself plenty of time to build these things, but the more I automate, the less I have to think about this work down the road (build for success now, rather than panic-patch later). Thus, I began my journey into Docker, docker-compose, and secure remote access (so I can also take vacations or work remotely, as I’m doing while writing this article).

Docker and docker-compose allow you to build small services that you can deploy and stitch together with other tools to automatically scale infrastructure and/or move those services to a new provider, whether you decide you don’t want to self-host or you don’t like the provider you are on (e.g., let’s say you got tired of Google Cloud, AWS, Azure, or DigitalOcean; moving is easier with Docker and docker-compose). About a year ago, I began building numerous internal services with docker-compose in my spare time, as the technology was increasingly interesting to me but my exposure to it had been limited, because AWS has its own custom internal tooling. I also wanted to automate all the things at home, as a precursor to building something more open-box: first a version built for my own needs, then one that anyone could pick up and deploy quickly.

So, I set off building a docker-compose file with a bunch of services like Plex (media server), steamcache-dns (DNS caching/forwarding), lancache (HTTP caching for things like games, Windows updates, and more), and others. I was already running these services, but via direct docker command-line actions. I am a Linux nerd, after all, so I tend to default to the command line when proving something out or running a service. Mainly, I needed to convert those command-line actions into a file that I could start and stop with simpler commands and that would automatically bring up new services as they were added. That still only made them usable at home. To expand this proof of concept to my business, I needed something that would let me expose these services (which were inherently NOT secure) securely (HTTPS/TLS) and from a single entry point (a reverse proxy). Enter Traefik.
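Converting `docker run` invocations into a docker-compose file is mostly mechanical: each flag maps to a service key. The Python below is a minimal, hypothetical sketch of that mapping, not a tool the author used. It handles only a handful of common flags (`--name`, `--restart`, `-p`, `-v`, `-e`, `-d`) and rejects anything else rather than silently dropping it. The Plex-style command, paths, and port in the example are placeholders.

```python
import json
import shlex

# Repeatable flags that map onto list-valued compose keys.
LIST_FLAGS = {
    "-p": "ports", "--publish": "ports",
    "-v": "volumes", "--volume": "volumes",
    "-e": "environment", "--env": "environment",
}


def docker_run_to_service(command: str) -> tuple[str, dict]:
    """Translate a simple `docker run` command into (service_name, service_dict)."""
    tokens = shlex.split(command)
    if tokens[:2] != ["docker", "run"]:
        raise ValueError("expected a command starting with 'docker run'")
    args = iter(tokens[2:])

    def value(flag: str) -> str:
        # Consume the argument that follows a flag such as --name.
        try:
            return next(args)
        except StopIteration:
            raise ValueError(f"{flag} is missing its value") from None

    service: dict = {}
    name = None
    for token in args:
        if token in ("-d", "--detach"):
            continue  # `docker compose up -d` takes care of detaching
        if token == "--name":
            name = value(token)
        elif token == "--restart":
            service["restart"] = value(token)
        elif token in LIST_FLAGS:
            service.setdefault(LIST_FLAGS[token], []).append(value(token))
        elif token.startswith("-"):
            raise ValueError(f"unsupported flag: {token}")
        else:
            service["image"] = token
            trailing = list(args)  # anything after the image is the command
            if trailing:
                service["command"] = trailing
            break
    if "image" not in service:
        raise ValueError("no image found in command")
    if name is None:
        # Fall back to the repository name, e.g. "nginx:alpine" -> "nginx".
        name = service["image"].rsplit("/", 1)[-1].split(":", 1)[0]
    return name, service


if __name__ == "__main__":
    cmd = ("docker run -d --name plex --restart unless-stopped "
           "-p 32400:32400 -v /srv/plex/config:/config plexinc/pms-docker")
    name, svc = docker_run_to_service(cmd)
    print(json.dumps({"services": {name: svc}}, indent=2))
```

The JSON printout mirrors the nesting a `services:` block would have in docker-compose.yml. Failing loudly on unknown flags is deliberate: a converter that quietly drops `--privileged` or `--network` would produce a compose file that behaves differently from the original command.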

Whew…took a LONG time to get to this, huh. So I started reading about Traefik and how it works, then decided to implement it with some of my services (things that track my media library, for one) and set it up so they could be exposed to the internet and I could manage them remotely. Success! It was pretty easy to set up these services AND get SSL certificates for them (for free, thanks to Let’s Encrypt). However, this is only super-simple when you’re adding labels to Docker containers that are already built. It’s a good thing I had already built that docker-compose file and set it up to launch services for me. All I needed to do was add labels and set up Traefik to handle reverse proxying for the existing containers, which was rather simple.
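Those labels follow a predictable naming scheme in Traefik v2’s Docker provider: a router with a `Host()` rule, an entry point, a certificate resolver, and the container port to forward to. The Python below is a small, hypothetical generator for that label set. The entry point name `websecure`, the resolver name `letsencrypt`, and the `tracker` service are placeholders; the first two must match whatever your own Traefik static configuration defines.

```python
def traefik_labels(name: str, host: str, port: int,
                   entrypoint: str = "websecure",
                   cert_resolver: str = "letsencrypt") -> dict[str, str]:
    """Build Traefik v2 Docker-provider labels routing `host` to a container port."""
    router = f"traefik.http.routers.{name}"
    return {
        "traefik.enable": "true",                          # opt this container in
        f"{router}.rule": f"Host(`{host}`)",               # match on the Host header
        f"{router}.entrypoints": entrypoint,               # listener defined in static config
        f"{router}.tls.certresolver": cert_resolver,       # ACME resolver issuing the cert
        f"traefik.http.services.{name}.loadbalancer.server.port": str(port),
    }


if __name__ == "__main__":
    # Hypothetical service; compose accepts labels as a list of "key=value" strings.
    for key, val in traefik_labels("tracker", "tracker.example.com", 8080).items():
        print(f"- {key}={val}")
```

Generating the labels from one function keeps the router and service names consistent, which is an easy place to make a typo when copying label blocks by hand.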

The challenge comes in trying to integrate services that are part of my core business strategy into the same environment, namely a Grafana dashboard to track service statistics, which runs on an external server, NOT in Docker on the same machine/cluster. Until today (September 10, 2022), I had run into issues trying to set it up. Now that I have an unsecured version working, I only need to do a bit more prototyping locally before I have a secured version I can add to my configuration and store in my code repository. I’m still not doing things the “right” way for security, as I have hard-coded AWS API credentials in my docker-compose file, but it’s in a private repository and the scope of those credentials is limited to Route 53, which is acceptable for now. When I put this into production and build the final open-source product, it will not contain any credentials.
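Because Docker labels can only describe containers, one common way to put a non-container backend like an external Grafana server behind Traefik is its file provider, where routers and services are declared in a dynamic configuration file. The Python below is a hedged sketch that builds that structure as a dictionary (to be written out as the YAML or TOML file Traefik watches). The hostname, backend address, entry point, and resolver names are all placeholders, and `external_service_config` is a hypothetical helper; Grafana’s default port of 3000 is assumed.

```python
import json
from urllib.parse import urlparse


def external_service_config(name: str, host: str, backend_url: str,
                            entrypoint: str = "websecure",
                            cert_resolver: str = "letsencrypt") -> dict:
    """Build a Traefik v2 file-provider config routing `host` to an external URL."""
    parsed = urlparse(backend_url)
    if parsed.scheme not in ("http", "https") or not parsed.netloc:
        raise ValueError(f"backend_url must be an absolute http(s) URL: {backend_url!r}")
    return {
        "http": {
            "routers": {
                name: {
                    "rule": f"Host(`{host}`)",
                    "entryPoints": [entrypoint],
                    "service": name,  # points at the service defined below
                    "tls": {"certResolver": cert_resolver},
                }
            },
            "services": {
                # Traefik forwards matching requests to this URL on the other server.
                name: {"loadBalancer": {"servers": [{"url": backend_url}]}}
            },
        }
    }


if __name__ == "__main__":
    cfg = external_service_config("grafana", "grafana.example.com",
                                  "http://10.0.0.5:3000")
    print(json.dumps(cfg, indent=2))
```

Validating the backend URL up front catches the common mistake of writing `10.0.0.5:3000` without a scheme, which Traefik would not treat as a usable server address.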

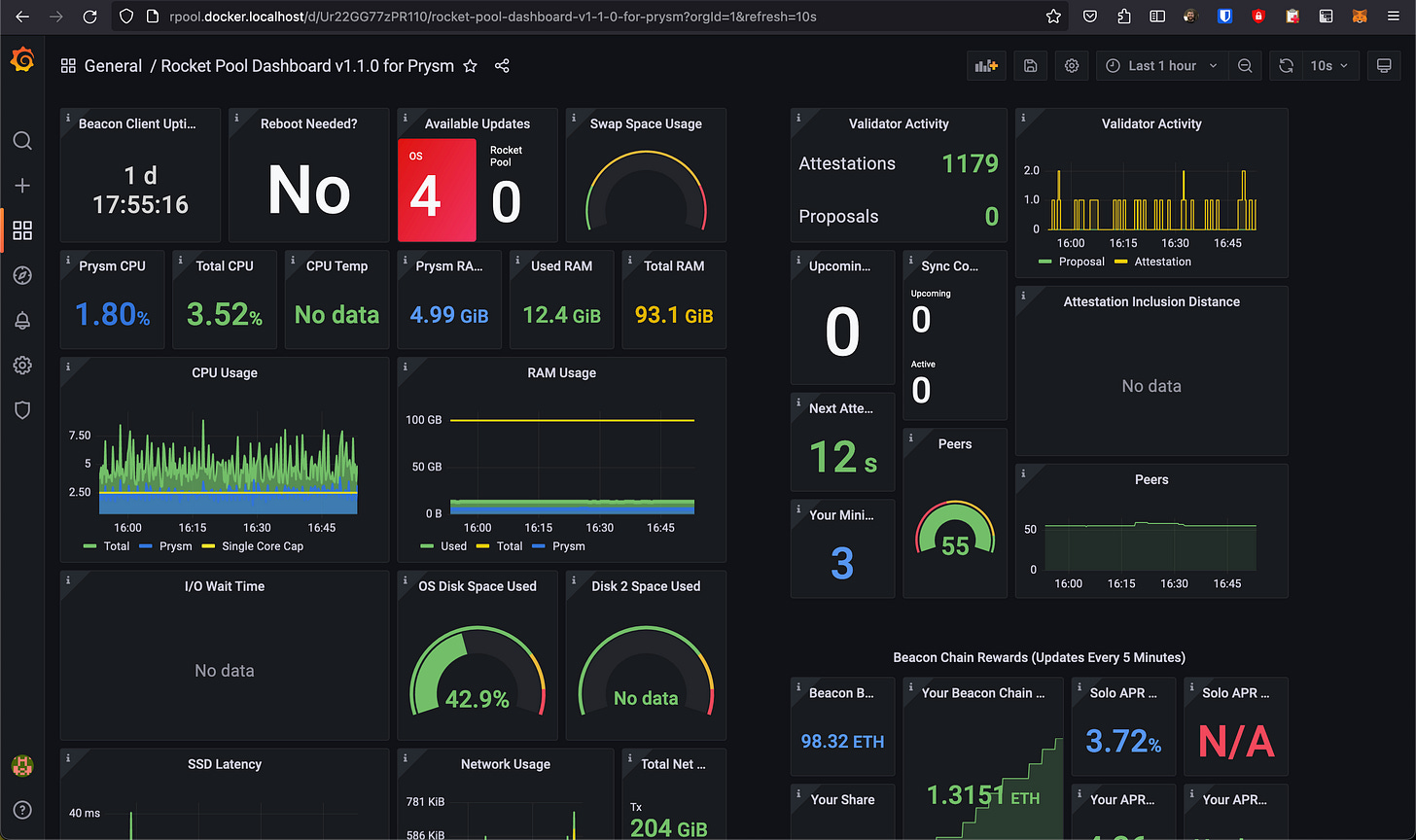

Traefik’s reverse proxy, automatic service discovery, and SSL certificate management make the next step, scaling, much easier when you deploy it to a cluster. My cluster of choice (begrudgingly) is Kubernetes. In fact, I already have a Kubernetes cluster set up in my infrastructure at home, which is intended to run much of my back-office apps and services until my revenue stream supports expansion into a public cloud provider or my own external hosting solution (e.g., colocation at an internet exchange). There’s an additional building block I’ve been working on to support that Kubernetes cluster build, Ansible scripting, but I’ll leave that discussion for another time. As the focus is SUPPOSED to be on an intro of myself and Traefik, I’ll try to stick to Traefik. Once a decent docker-compose file is set up, its services can be translated into Kubernetes manifests (a tool such as Kompose can help with that conversion) so that Kubernetes handles their deployment, and I can still point to my external service on another internal server to deliver the dashboarding solution. The screenshot above shows what this looks like running right now off of my laptop (so it’s only visible to me, within my home network, and is referenced directly from my laptop for ease of development).

If you look in the address bar, you’ll see rpool.docker.localhost followed by the dashboard name and a bunch of other metadata. Rocket Pool is not something I’m trying to get people to jump into, as it’s highly speculative, being part of the Ethereum ecosystem, which is going through the Merge. There’s a lot of controversy over Ethereum, cryptocurrency, Bitcoin, and public blockchains, and that’s not the point of what I’m demonstrating here. It is part of my longer-term strategy of building solid business tools, putting them in a generic box (such as dashboarding software like Grafana, which is the purpose of that screenshot), and enabling a business, such as mine, to quickly build out a number of critical infrastructure tools and make them easily accessible to the correct parties (such as executives who want to view dashboard statistics…something I worked on a bit at AWS before I left, related to host reboots).
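The `rpool.docker.localhost` hostname is what lets a single Traefik entry point serve many dashboards: the proxy inspects each request’s Host header and picks a backend from its rules. The Python below is a deliberately simplified, hypothetical model of that idea. It supports exact hostname matching only (Traefik’s real rules support much more), assumes lowercase keys in the routing table, and uses a made-up backend URL.

```python
from typing import Optional


def pick_backend(host_header: str, routes: dict[str, str]) -> Optional[str]:
    """Return the backend URL for an exact Host match, or None if no rule matches.

    Hostnames are compared case-insensitively, and any ":port" suffix or
    trailing dot is ignored. IPv6 literals are out of scope for this sketch.
    """
    hostname = host_header.partition(":")[0].rstrip(".").lower()
    return routes.get(hostname)


if __name__ == "__main__":
    # Hypothetical routing table; the backend address is a placeholder.
    routes = {"rpool.docker.localhost": "http://grafana:3000"}
    print(pick_backend("rpool.docker.localhost:80", routes))
    print(pick_backend("unknown.docker.localhost", routes))
```

Returning `None` for an unknown host corresponds to the proxy answering with a 404 instead of guessing a backend, which keeps unrelated services from being reachable under the wrong name.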

This proves out the concept that I can host Traefik on a Docker setup (and, by extension, Kubernetes) and set up this service, which can eventually provide secure transmission (keeping the data and interfaces safe) while also allowing me to scale other services with it in a clustered container environment (Kubernetes). With the concept proven, it now needs to be finalized.

Next Steps

Now that I’ve completed the proof-of-concept phase, I need to combine the results from the various components and put the entire thing together. Once I’ve done that, I can break it into its constituent parts and use those pieces as custom building blocks for any business to use (the key goal of my project). That work includes setting up HTTPS redirection, creating a credential retrieval system to remove the AWS credentials from the docker-compose file, exposing Traefik and additional services to the internet, setting up Traefik in Kubernetes, and migrating what services I can from other hosts into Kubernetes to allow scaling up. After I’ve completed some of those tasks, I can repurpose some of my servers for my next-phase project: my private cloud. Each piece of this puzzle is critical to setting up a strong foundation for building new products/services and scaling them for business use. Also, many of these pieces can be made generic, giving any business the ability to download either the repository or a pre-packaged executable for easy deployment, a sort of “business in a box” or “business starter kit” solution.
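For the credential retrieval item, one common pattern (sketched here as an assumption, not a finished design) is to keep secrets out of docker-compose.yml entirely and read them at runtime, either from an environment variable or from a file whose path is given in a companion `*_FILE` variable. That convention pairs naturally with Docker secrets, which are mounted as files under `/run/secrets/`. The helper name `read_secret` is hypothetical.

```python
import os
from pathlib import Path


def read_secret(name: str) -> str:
    """Return a secret from $NAME, or from the file whose path is in $NAME_FILE.

    Raises RuntimeError if neither (or both) are set, so a misconfiguration
    fails loudly instead of silently using the wrong source.
    """
    direct = os.environ.get(name)
    file_path = os.environ.get(f"{name}_FILE")
    if direct and file_path:
        raise RuntimeError(f"set only one of {name} and {name}_FILE")
    if direct:
        return direct
    if file_path:
        # Secret files usually end with a newline; strip surrounding whitespace.
        return Path(file_path).read_text().strip()
    raise RuntimeError(f"neither {name} nor {name}_FILE is set")
```

For example, `read_secret("AWS_SECRET_ACCESS_KEY")` would return the key whether it was injected directly or through `AWS_SECRET_ACCESS_KEY_FILE`. The repository then only has to reference the secret’s name, never its value.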

Final Thoughts

I know this was long, didn’t go into a lot of specific details, and was quite wordy, but I hope it at least piques people’s interest enough to consider coming back and reading more. As I continue to write, I’ll improve my writing and get more succinct. My plan is to write one tech-based article and one tech investing/investing article per month. At this time, I believe I will archive most posts after one month, unless they’re tech articles with specific guidelines (such as kernel/OS/network tuning guides, application configuration guides, etc., which I intend to keep generally publicly available). Archived articles, I think, will eventually go behind a paywall to help further supplement the business (I’m thinking on a subscription basis). I am very open to feedback/criticism on my writing, as well as to ideas for what to write about. I have more stories to tell and topics to write about than I can remember off the top of my head, so as I find the time, I will share them. Hopefully, others will find my insights valuable as well. Also, I’ll always take donations in several payment forms (my favorites being $ETH or $BTC), which will be reported fairly as business revenue. You can find my Coinbase Commerce wallet on my website below:

https://www.jbecomputersolutions.com/

Later, I’ll set up subscription info through Substack, and once I have a few articles and subscribers, I’ll set a very fair monthly/annual subscription price (I’m thinking under $5/mo…likely $2/mo.) to access archived posts, once there are enough of them that a subscription would be worthwhile for readers. Last thing, I promise: I have not yet redesigned the website, and I’m not a web/front-end developer. I will be redesigning it, as it is nightmarishly designed, so please be kind in regard to the website. It’s more or less there as a proof of concept and a way to get in contact with me (email coming soon).